The Accidental Revolution

After 28 years around brand and technology messaging, I have strong feelings about how poorly the big AI companies have designed and marketed their consumer products. Kind of mind-boggling in their tone deafness, if you ask me. But it begins to make more sense when you realize the most important technology of the last 50 years wasn't a masterstroke of strategy. It wasn't a 4D-chess move by a genius CEO.

It was a total accident.

Rewind to November 2022. OpenAI wasn't a product company. They were a high-minded research lab, the "Bell Labs" of AI, founded on a mission to "save humanity" from Big Tech monopolies.

Their original business model was boringly practical. Be like the electric company. They just wanted to sit in the background and pipe raw intelligence out to other companies to use in their products.

At the time, they were building a promising new model, but they lacked real-world feedback to improve it. So they slapped a very basic chat interface on top of GPT-3.5, a model tuned using a process called Reinforcement Learning from Human Feedback (RLHF), and released it as a "research preview".

No Super Bowl commercial. No keynote with a guy in a black turtleneck. Just a tweet and a blog post. They just wanted you and me to chat with the bot and tell it when it was being toxic, dumb, or weird, so they could scrub the model clean for resale to clients.

Internally, expectations were low, with engineers reportedly worried users would find it boring.

Then the internet happened.

In five days, they hit one million users. (It took Netflix three and a half years to do that.)

Suddenly, this slapdash "research preview" became the main event. And in the process, OpenAI accidentally crushed their own business plan. By releasing a free, powerful chatbot, they instantly made many of their paying partners (companies like Jasper that were reselling OpenAI's tech) obsolete.

They went from background utility provider to one of the most famous consumer brands on earth in a week. Two months later: 100 million users. Today, it's just shy of a billion.

The chaos rippled outward. Google, which actually invented the tech behind ChatGPT but was too afraid to release it, hit the panic button. Management declared a "Code Red," dragging founders Larry Page and Sergey Brin out of retirement. They rushed their own bot, Bard (any OGs remember that?), out the door so fast that a factual error in the very first demo wiped $100 billion off their market value.

Why does this history lesson matter to you?

Because it explains why no one ever took the time to create a user interface that truly fit the tool, or a proper campaign to teach customers how to actually use it. Once the fuse was lit, the arms race for market dominance was on. Marketing and PR departments be damned.

Meanwhile, we users did what felt... normal. We looked at the text box staring back at us and typed the way we'd always typed.

Keywords. Fragments. The shorthand that Google trained us to use for thirty years.

Best Italian restaurant downtown.

How to remove red wine stain.

Top 10 things to do in Costa Rica.

Command. Response. Walk away.

And for a lot of people, that was it. They "tried AI", got something generic or convoluted, shrugged, and moved on. "I don't get what the big deal is."

Right now, most of those billion users' AI chat histories are indistinguishable from their Google search histories. Same compressed queries. Same transaction-style interactions. Same expectation that the machine will figure out what you meant from the precious few words you gave it.

That's not a personal failing. It's thirty years of muscle memory working in the absence of any consumer guidance.

Conor Grennan, NYU's Chief AI Architect, calls this "the Google reflex." Your brain sees a text box and pattern-matches to every search box you've ever used. The neural pathways fire before you even think about it. Type less. Click fast. Move on.

That reflex made sense for Google search. The algorithm was doing the heavy lifting, matching your keywords to billions of indexed pages. Your job was to be terse and let the machine sort it out.

But AI doesn't work that way.

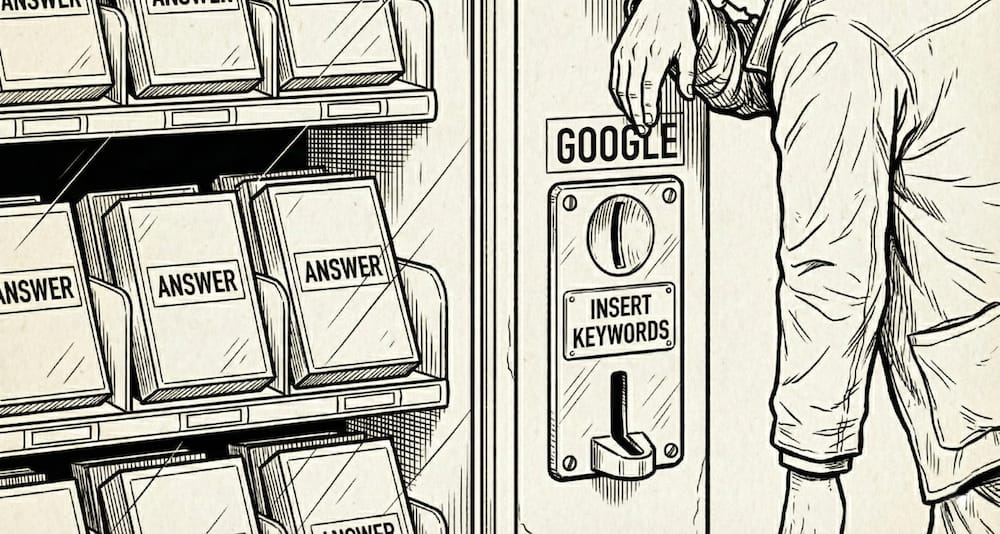

Think of Google search as a vending machine.

You feel that nagging hunger for a little info snack, you drop in your query, and pull out the answer. And, of course, "exact change" makes the transaction faster.

Type less. Click fast. Move on.

Because Google search IS a sophisticated vending machine.

But AI is more like having a private chef. If you just walk in and shout "food," there's a good chance you'll get something you hate. But if you take the time to explain your dietary needs, the occasion, and the ingredients you have on hand, you'll likely come away much more satisfied.

Same with AI. Shout "What's cool to do in Japan?" and you're going to get something generic. Technically correct. Definitely forgettable.

But what if you said:

"I'm planning a trip to Japan with my husband. We've got 10 days in March. We want historical sights, but also modern nightlife. We have mobility issues that limit the amount of walking we can do in a day. We want comfortable accommodations, but we don't need a resort either. What would you suggest?"

Now you're having a conversation. Now there's something to work with.

The difference isn't that you learned a trick. The difference is you stopped compressing.

The people who find AI genuinely useful aren't focused on magic prompt structures or secret techniques. They just stopped treating AI like a search engine and started treating it like a conversation. They stopped compressing and started briefing.

If you've ever delegated work, you've done this. Written instructions for a house-sitter. Briefed a contractor. Handed off a project before your vacation. This is the same thing AI is asking you to do.

Google trained you to be stingy with the chat. AI wants you to be verbose.

Why ChatGPT Users Go Back to Google (article) Gener8: Data reveals a "digital handshake". People use AI for synthesis, then return to Google to verify. The flip is still in progress.

How AI Is Changing Search Behaviors (article) Nielsen Norman Group: UX research on how generative AI is reshaping search and why "long-standing habits" are the biggest obstacle.

The Effect of New Technologies on English (Video) Linguist David Crystal on how technology has always changed how we communicate, and why the panic is usually wrong.

How Bell Labs Became the Epicenter of 20th-Century Innovation (podcast) Marketplace: The original "Bell Labs of AI". How a research lab accidentally invented the future. Sound familiar?

The Goldfish in the Machine

If you've ever been mid-conversation with AI when it suddenly "forgot" something you told it three messages ago, you're not imagining things.

That's real. And it's going to keep happening.

But here's the thing: that amnesia isn't a flaw to work around. Once you understand why it happens, you'll recognize something familiar. You've been solving this problem your whole life.

Every AI conversation has a context window, a finite amount of information the AI can "see" at any given moment. And the AI only knows what it can see.

Picture an AI chat like this: Let's say you tape a long scroll of paper to your wall from floor to ceiling. Now imagine you set a piece of glass over the bottom foot of that scroll. The AI can only see what's visible through the glass. That is its context window. So you start writing at the bottom of the paper, the AI adds its replies above your prompt, then you add some documents on top of that, maybe a couple of images. Before too long the area beneath the glass is filled. If you want the AI to see new stuff, you have to start moving the glass up the wall. The result is that the earliest stuff you wrote, including those documents you gave it as reference, starts to fall out of view to make room for the new stuff.

When that happens, that information doesn't get fuzzy. It ceases to exist. The AI isn't "forgetting" the way you forget where you put your keys. It literally cannot see that content anymore. As far as it's concerned, it was never there.
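If it helps to see the glass-on-the-scroll idea in motion, here is a minimal sketch. It assumes the simplest possible behavior: the newest messages are kept until a size budget runs out, and everything older drops out of view. The function name `visible_window`, the sample messages, and the word-count budget are all made up for illustration. Real tools count tokens rather than words and may handle overflow differently.

```python
def visible_window(messages, budget):
    """Return the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    # Walk backward from the newest message, like sliding the glass up the wall.
    for text in reversed(messages):
        size = len(text.split())  # crude stand-in for a real token count
        if used + size > budget:
            break  # this message and everything older is out of view
        kept.append(text)
        used += size
    return list(reversed(kept))


# Hypothetical chat, oldest message first.
chat = [
    "Constraint: keep every answer under 100 words.",
    "Here is the reference document you asked for.",
    "Great, now draft the introduction for me.",
    "Make it friendlier and add a short example.",
]

window = visible_window(chat, budget=20)
for line in window:
    print(line)
```

With a budget of 20 words, only the last two messages survive. The constraint you set in the very first message is no longer something the AI can see.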

This explains why long conversations drift. Why AI starts contradicting things it said earlier. Why it "forgets" the constraint you set at the beginning. The context scrolled out. What makes it deceptive is that the topic of those early prompts and reference docs is likely still in the window, while the details behind it are not, so the AI's understanding is now incomplete. That's why it can still appear to know what you're asking about, yet give you terrible answers or, worse, try to fill the knowledge gaps using the remaining fragments. The result is those confident ‘lies’ you’ve heard about or experienced: the ‘hallucinations’.

But you've solved this before.

Think about every time you've had to bring someone up to speed who wasn't in the room.

The colleague who missed the meeting. Explaining a movie plot to someone who walked in twenty minutes late. Telling a story at a dinner party where nobody knows the cast of characters.

All of those moments required the same thing: calibrating context for someone starting cold. You instinctively know what they need to know. You sense when you've given too little (they're lost) or too much (their eyes glaze). You adjust.

AI is just... always the person who walked in late. A super genius with the short-term memory of a goldfish.

Assume every new conversation starts cold. Yesterday's chat doesn't exist. Last week's breakthrough? Gone. To AI, you're a stranger every time.

This feels like a limitation.

In some ways it is. But it's also making something visible that's always been true about communication.

Ever fired off an email you thought was perfectly clear, only to get a reply that proved it wasn't?

Ever had a team project go sideways because assumptions weren't made explicit? Ever made plans with friends that blew up because key details weren't agreed upon to start with?

That friction was always there. It just took longer to surface. Miscommunication between humans can fester for weeks before the gap becomes obvious. With AI, you find out in about three seconds.

The context window isn't just a constraint. It's a mirror.

Here's where AI diverges from the humans you're used to.

When you bring a colleague up to speed on a project, or text a friend the plan details, they easily carry that context forward.

AI doesn't work that way. No matter how well you set context at the start, the window will scroll. The briefing you gave at message five may be gone by message forty. Not because you did anything wrong, but because the medium has a hard ceiling.

This is why understanding the mechanics matters. It's not enough to be good at context-setting. You need to be good at context management. That is the term of art for this practice in AI parlance.

So how do you manage context?

Mostly what you'd naturally do when talking to someone, with a few adaptations for the scrolling window.

When your spouse or friend clearly doesn’t remember the details you told them last week, you remind them. I’m sure that, like me, you very patiently re-explain those missing details so they can regain their full context of the situation (Cut to: knowing wink at my wife as she rolls her eyes here).

When you switch topics, start fresh. You wouldn't walk up to the new guy at work mid-explanation and expect him to follow. Same principle: new topic, new chat.

When something matters, say it early. If you're filling in a friend before introducing them to your new date, you don't bury the fact that your date just got out of prison at the end. Same here: what AI needs to know most goes up front, so every answer is shaped by it from the start. And if the chat runs long, say it again.

When the conversation drifts, reset. "Let me back up and explain where we are." That instinct is correct. If you're deep into a complex project and AI starts losing the thread, pause. Ask the AI to give you a comprehensive summary of the ground you’ve covered so far. That output will easily reveal what’s gotten lost. Take that summary and use it to start a new chat.

Watch document overload. If your chat includes uploading multiple files, each one pushes earlier material up the scroll. For large projects, work in batches: upload a few documents, extract what you need into summaries, start fresh, repeat.
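The batch routine is simple enough to sketch in a few lines. This is only an illustration of the rhythm, assuming a batch size of two. The helper name `batches` and the file names are hypothetical, and nothing here talks to an actual AI tool.

```python
def batches(items, size):
    """Split a list into consecutive groups of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical project files.
files = ["contract.pdf", "budget.xlsx", "notes.docx", "timeline.pdf", "emails.txt"]

plan = batches(files, 2)
for number, group in enumerate(plan, start=1):
    print(f"Chat {number}: upload {', '.join(group)}, save a summary, start fresh")
```

Each pass leaves you with a short summary instead of a pile of full documents, and the summaries are what you carry into the next chat.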

Most AI frustrations come from expecting it to behave more human.

People expect long conversations to get better over time, like building rapport with a colleague. They expect that when they say "based on everything we've discussed," the AI actually has access to everything they've discussed.

Sometimes it does. Often it doesn't.

The communication instincts you've built (reading your audience, calibrating context, knowing when to back up and re-establish common ground) transfer directly. Trust them.

## Try This [Decompression & Handoff]

### The Decompression:

You're going to run the same task twice. Feel the difference.

Chat 1: The Sticky-Note Brief

Open your AI tool. Type something you'd type into Google:

"Write me a bio for my website"

Note what you get.

Chat 2: The Full Briefing

New chat. Same task. But this time, brief it like you'd brief a contractor:

"I need a professional bio for my consulting website. I'm a [your field] with [X years] experience. My audience is [who]. Tone should be [approachable/authoritative/etc]. Include [specific things]. Keep it under 150 words."

Don't be afraid to give it notes after the first pass.

The diagnostic: Compare the outputs. Which one felt like you were managing the result?

That management feeling is the skill. The Google reflex fights it. Practice expands it.
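If you like templates, the full briefing can be treated as a fill-in-the-blanks form. The sketch below is one way to do that, not a required format. The function `build_brief`, its parameter names, and the sample values are all invented for illustration.

```python
def build_brief(task, field, years, audience, tone, must_include, max_words):
    """Assemble a full briefing from the details a sticky-note prompt leaves out."""
    return (
        f"I need {task}. "
        f"I'm a {field} with {years} years of experience. "
        f"My audience is {audience}. "
        f"Tone should be {tone}. "
        f"Include {', '.join(must_include)}. "
        f"Keep it under {max_words} words."
    )


# Hypothetical example values; swap in your own.
brief = build_brief(
    task="a professional bio for my consulting website",
    field="supply chain consultant",
    years=15,
    audience="operations leaders at mid-size manufacturers",
    tone="approachable",
    must_include=["two signature projects", "my speaking work"],
    max_words=150,
)
print(brief)
```

The code is beside the point. What matters is that every blank in the form is a detail the Google reflex would have skipped.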

### The Handoff:

When you have been chatting for a while and you sense you may start experiencing some drift, let the AI help you bridge the gap. Try a prompt that goes something like this:

"Summarize this conversation as a handoff prompt for a fresh chat. Include the goal, key context, current status, a list of files to re-upload, and any other details that will help provide a seamless transition."

Then copy/paste the output to a fresh chat.

The diagnostic: When you paste the output prompt into the new chat, end by asking it to summarize its understanding of the material.

You will immediately know if there are additional gaps to fill after successfully transplanting the wizened old goldfish brain into the new clean one.

Exit Through The Gift Shop:

You've been briefing, explaining, delegating and convincing friends, family and stakeholders for decades. Anticipating what they need to know and what they'll probably screw up if you don't tell them first. In other words, managing.

Well, guess what? AI makes everyone a manager.

The Skills Translation Workbook ($29) is a guided self-assessment that reveals where the things you already do can make AI work for you.

Takes about an hour. When you're done, you will:

Get a clear diagnosis of your AI readiness level

See where the skills you already have map the strongest

Learn your recommended next steps toward AI fluency

You can choose to share your results with us or not. If you opt-in, you'll get 10% off our Custom Learning Path where we help you build a structured AI adoption plan for your actual situation.

Quote to Steal:

"If it looked like C3PO, you would never say 'Hey, give me the top 10 things to do in Costa Rica.' You'd have a conversation."

Thanks for reading,

-Ep

Miss any past issues? Find them here: CTRL-ALT-ADAPT Archive

Know someone still Googling their AI? Forward this. Help them decompress.

Did this newsletter find you? If you liked what you read and want to join the conversation, CTRL-ALT-ADAPT is a weekly newsletter for experienced professionals navigating AI without the hype. Subscribe here —>latchkey.ai